The following patent was published July 5, 2012 and concerns a surveillance camera system designed to provide “360-degree, 240-megapixel views on a single screen.” A version of the system was installed at Boston Logan International Airport in 2010 as a trial run sponsored by the Department of Homeland Security. The system was developed by a number of researchers at MIT’s Lincoln Laboratory. Thanks to Kade Ellis of the American Civil Liberties Union of Massachusetts for originally pointing out the patent.

Update: Carlton Purvis of IP Video management has provided several high-resolution photos of the ISIS system.

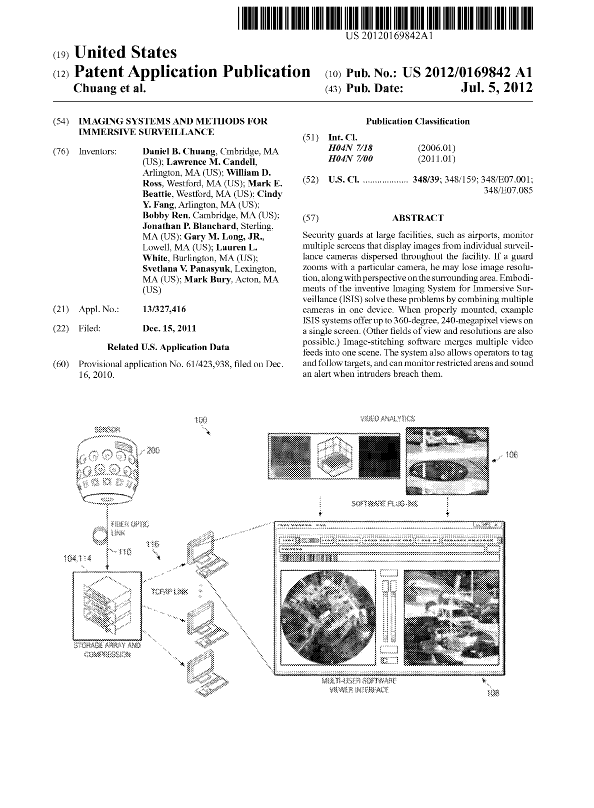

IMAGING SYSTEMS AND METHODS FOR IMMERSIVE SURVEILLANCE

- 65 pages

- July 5, 2012

- 3.3 MB

Security guards at large facilities, such as airports, monitor multiple screens that display images from individual surveillance cameras dispersed throughout the facility. If a guard zooms in with a particular camera, he may lose image resolution, along with perspective on the surrounding area. Embodiments of the inventive Imaging System for Immersive Surveillance (ISIS) solve these problems by combining multiple cameras in one device. When properly mounted, example ISIS systems offer up to 360-degree, 240-megapixel views on a single screen. (Other fields of view and resolutions are also possible.) Image-stitching software merges multiple video feeds into one scene. The system also allows operators to tag and follow targets, and can monitor restricted areas and sound an alert when intruders breach them.

…

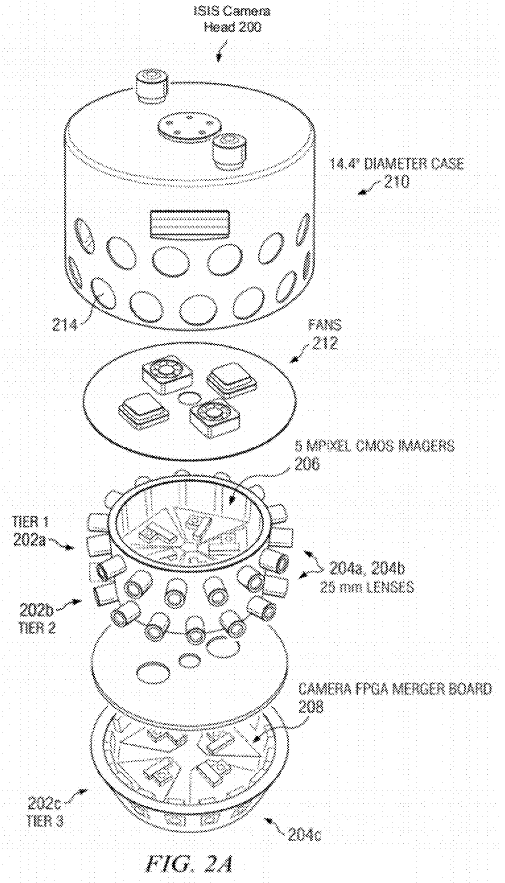

Embodiments of the present invention include a system for monitoring a wide-area scene and a corresponding method of monitoring a wide-area scene. An illustrative system includes an array of first cameras and an array of second cameras. Each first camera has a first field of view, and each second camera has a second field of view that is different from the first field of view. In another example, the first field of view may be a first angular field of view, and the second field of view may be a second angular field of view that is smaller than the first angular field of view. The array of first cameras and the array of second cameras acquire first imagery and second imagery, respectively, which is used to form an image of the wide-area scene.

An alternative embodiment includes a surveillance system comprising an array of first cameras, an array of second cameras, a processor, a server, and an interface. Each first camera has a first angular field of view and is configured to provide respective first real-time imagery of a corresponding portion of the wide-area scene. Similarly, each second camera has a second angular field of view different (e.g., smaller) than the first angular field of view and is configured to provide respective second real-time imagery of a corresponding portion of the wide-area scene. The processor is operably coupled to the array of first cameras and the array of second cameras and is configured to decompose the first real-time imagery and the second real-time imagery into image tiles and to compress the image tiles at each of a plurality of resolutions. The server, which is operably coupled to the processor, is configured to serve one or more image tiles at one of the plurality of resolutions in response to a request for an image of a particular portion of the wide-area scene. An interface communicatively coupled to the server (e.g., via a communications network) is configured to render a real-time image of the wide-area scene represented by the one or more image tiles.
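The patent text does not say how tiles are sized, how the resolution levels relate, or which codec is used. A minimal sketch of the "decompose into tiles and compress at each of a plurality of resolutions" step, assuming a hypothetical 256-pixel tile, a pyramid in which each level halves the previous one by 2×2 averaging, and `zlib` as a stand-in for whatever image codec a real system would use. Tiles are keyed by `(level, row, col)`, with level 0 at full resolution; all function names here are hypothetical.

```python
import zlib
import numpy as np

TILE = 256  # hypothetical tile edge length in pixels


def downsample_2x(img: np.ndarray) -> np.ndarray:
    """Halve resolution by averaging each 2x2 block (an odd last row/column is cropped)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w].astype(np.float32)
    out = (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2]) / 4
    return out.astype(np.uint8)


def build_tile_pyramid(img: np.ndarray, levels: int, tile: int = TILE) -> dict:
    """Return {(level, row, col): (tile_shape, compressed_bytes)}; level 0 is full resolution."""
    tiles = {}
    current = img
    for level in range(levels):
        h, w = current.shape[:2]
        for r in range(0, h, tile):
            for c in range(0, w, tile):
                # Edge tiles may be smaller than tile x tile, so keep their shape.
                block = np.ascontiguousarray(current[r:r + tile, c:c + tile])
                tiles[(level, r // tile, c // tile)] = (block.shape, zlib.compress(block.tobytes()))
        if level < levels - 1:
            current = downsample_2x(current)
    return tiles


def decode_tile(entry) -> np.ndarray:
    """Decompress one stored tile back into a uint8 array."""
    shape, payload = entry
    return np.frombuffer(zlib.decompress(payload), dtype=np.uint8).reshape(shape)
```

For a 512×768 RGB frame and three levels, this yields six full-resolution tiles, two at half resolution, and one at quarter resolution. Storing every level up front trades memory for the ability to answer any request without resampling at serve time.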

Still another embodiment includes a (computer) method of compressing, transmitting, and, optionally, rendering image data. A processor decomposes images into image tiles and compresses the image tiles at each of a plurality of resolutions. The processor, or a server operably coupled to the processor, serves one or more image tiles at one of the plurality of resolutions in response to a request for an image of a particular portion of the wide-area scene. Optionally, an interface communicatively coupled to the server (e.g., via a communications network) renders a real-time image of the wide-area scene represented by the one or more image tiles.
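The method serves tiles "at one of the plurality of resolutions" for a requested portion of the scene, but the selection rule is not given. One plausible rule, sketched here as an assumption: pick the coarsest pyramid level that still supplies at least as many pixels across the requested region as the viewer will display, then list the tiles that cover the region at that level. This assumes tiles keyed by `(level, row, col)`, level 0 at full resolution, each level halving the previous one, and a hypothetical 256-pixel tile; `tiles_for_request` is a hypothetical name.

```python
import math


def tiles_for_request(x0, y0, x1, y1, display_width, levels, tile=256):
    """Return (level, [(level, row, col), ...]) for the region [x0, x1) x [y0, y1).

    Coordinates are in full-resolution (level 0) pixels. The chosen level is
    the coarsest one that still gives at least display_width pixels across
    the region, capped at the coarsest level available.
    """
    ratio = (x1 - x0) / display_width
    # Each level halves resolution, so we can drop floor(log2(ratio)) levels
    # before the region would have fewer pixels than the display.
    level = 0 if ratio <= 1 else min(levels - 1, math.floor(math.log2(ratio)))
    scale = 2 ** level
    # Map the request into this level's pixel grid (round outward).
    lx0, ly0 = x0 // scale, y0 // scale
    lx1, ly1 = math.ceil(x1 / scale), math.ceil(y1 / scale)
    keys = [
        (level, row, col)
        for row in range(ly0 // tile, (ly1 - 1) // tile + 1)
        for col in range(lx0 // tile, (lx1 - 1) // tile + 1)
    ]
    return level, keys
```

For a 768×512 scene with three levels, a full-scene request at a 192-pixel display width is answered by the single quarter-resolution tile `(2, 0, 0)`, while a zoomed request for the lower-right 256×256 region at 256 pixels wide is answered by the full-resolution tile `(0, 1, 2)`. The sketch does not clip requests to the scene bounds.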

Yet another embodiment includes a (computer) method of determining a model representing views of a scene from cameras in an array of cameras, where each camera in the array has a field of view that overlaps with the field of view of another camera in the array. For each pair of overlapping fields of view, a processor selects image features in a region common to the overlapping fields of view and matches points corresponding to a subset of the image features in one field of view of the pair to points corresponding to the same subset of image features in the other field of view of the pair, forming a set of matched points. Next, the processor merges at least a subset of each set of matched points to form a set of merged points. The processor then estimates parameters associated with each field of view based on the set of merged points to form the model representing the views of the scene.
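The patent does not name a feature descriptor, matching rule, or camera model. As an illustration of the per-pair steps only, the sketch below substitutes two standard techniques: nearest-neighbour descriptor matching with a ratio test, and a direct linear transform (DLT) that estimates a 3×3 homography from the matched points. A homography is a reasonable pairwise model when cameras share roughly the same optical center, as cameras packed into one head might; that, the 0.8 ratio, and the function names are assumptions. The merging of matched points across all pairs and the joint estimate of every camera's parameters are not shown, and a production version would add coordinate normalization and outlier rejection (e.g. RANSAC).

```python
import numpy as np


def match_features(desc_a: np.ndarray, desc_b: np.ndarray, ratio: float = 0.8):
    """Match each descriptor in view A to view B using a nearest-neighbour ratio test.

    Returns a list of (index_in_a, index_in_b) pairs. desc_b needs >= 2 rows.
    """
    matches = []
    for i, d in enumerate(desc_a):
        dists = np.linalg.norm(desc_b - d, axis=1)
        best, second = np.argsort(dists)[:2]
        # Keep the match only if it is clearly better than the runner-up.
        if dists[best] < ratio * dists[second]:
            matches.append((i, int(best)))
    return matches


def estimate_homography(pts_a: np.ndarray, pts_b: np.ndarray) -> np.ndarray:
    """DLT: find H (3x3, H[2, 2] = 1) such that pts_b ~ H @ [pts_a, 1].

    Needs at least four matched points, no three collinear.
    """
    rows = []
    for (x, y), (u, v) in zip(pts_a, pts_b):
        # Each correspondence contributes two linear equations in the 9 entries of H.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]
```

With noise-free correspondences generated from a known homography, `estimate_homography` recovers that homography up to floating-point error.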

Still another embodiment includes a (computer) method of compensating for imbalances in color and white levels in color images of respective portions of a wide-area scene, where each color image comprises red, green, and blue color channels acquired by a respective camera in a camera array disposed to image the wide-area scene. A processor normalizes values representing the red, green, and blue color channels to a reference value representing a response of the cameras in the camera array to white light. The processor equalizes the values representing the red, green, and blue color channels to red, green, and blue equalization values, respectively, then identifies high- and low-percentile values among each of the red, green, and blue color channels. The processor scales each of the red, green, and blue color channels based on the high- and low-percentile values to provide compensated values representing the red, green, and blue color channels.
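The three steps above (normalize to a white reference, equalize the channels, then scale using high- and low-percentile values) can be sketched for one camera's image as follows. The details are assumptions, not the patent's: "equalize" is read as scaling each channel so its mean equals a per-channel equalization value, the percentiles (1st and 99th) are hypothetical defaults, and the final scaling is read as a per-channel linear stretch that maps the low percentile to 0 and the high percentile to 1. `compensate_colors` is a hypothetical name.

```python
import numpy as np


def compensate_colors(img, white_ref, eq_values, low_pct=1.0, high_pct=99.0):
    """Return an (H, W, 3) float image in [0, 1].

    img        raw RGB values from one camera, shape (H, W, 3)
    white_ref  this camera's per-channel response to white light, shape (3,)
    eq_values  hypothetical per-channel equalization targets, shape (3,)
    """
    # 1. Normalize each channel to the camera's response to white light.
    out = np.asarray(img, dtype=float) / np.asarray(white_ref, dtype=float)
    # 2. Equalize: scale each channel so its mean equals its equalization value.
    means = out.reshape(-1, 3).mean(axis=0)
    out = out * (np.asarray(eq_values, dtype=float) / means)
    # 3. Find per-channel low/high percentiles and stretch [lo, hi] onto [0, 1].
    flat = out.reshape(-1, 3)
    lo = np.percentile(flat, low_pct, axis=0)
    hi = np.percentile(flat, high_pct, axis=0)
    out = (out - lo) / np.maximum(hi - lo, 1e-12)  # guard against a flat channel
    return np.clip(out, 0.0, 1.0)
```

Running every camera in the array through the same white reference and equalization targets is what lets adjacent tiles of a stitched panorama match in color. The percentile stretch, unlike a plain min/max stretch, ignores a small fraction of outlier pixels such as specular highlights.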

A yet further embodiment comprises a calibration apparatus suitable for performing white and color balancing of a sensor array or camera head. An illustrative calibration apparatus includes a hemispherical shell of diffusive material with a first surface that defines a cavity to receive the sensor array or camera head. The illustrative calibration apparatus also includes a reflective material disposed about a second surface of the hemispherical shell of diffusive material. One or more light sources disposed between the hemispherical shell of diffusive material and the reflective material are configured to emit light that diffuses through the hemispherical shell of diffusive material towards the cavity.

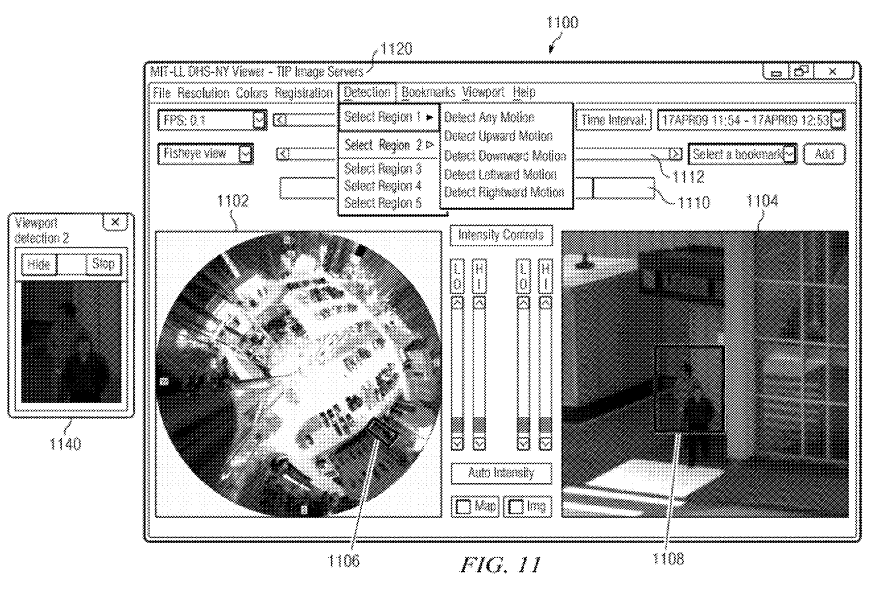

A further embodiment includes an interface for a surveillance system that monitors a scene. The interface may include a full-scene view configured to render a real-time panoramic image of the entire scene monitored by the surveillance system and a zoom view configured to render a close-up of a region of the panoramic view. In at least one example, the full-scene view and/or the zoom view may display a pre-warped image. An illustrative interface may optionally be configured to enable a user to select a region of the scene in the full-scene view for display in the zoom view. An illustrative interface may also be configured to enable a user to set a zone in the panoramic image to be monitored for activity and, optionally, to alert the user upon detection of activity in the zone. The illustrative interface may further populate an activity database with an indication of detected activity in the zone; the illustrative interface may also include an activity view configured to display the indication of detected activity to the user in a manner that indicates a time and a location of the detected activity and/or to display images of detected activity in the full-scene view and/or the zoom view. An exemplary interface may be further configured to track a target throughout the scene and to display an indication of the target’s location in at least one of the full-scene view and the zoom view, and, further optionally, to enable a user to select the target.
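The interface lets a user set a zone to be monitored for activity, alerts on activity in the zone, and records the time and location of each detection in an activity database. The patent summary does not say how activity is detected; the sketch below substitutes simple frame differencing on grayscale frames. The rectangular zones, both thresholds, the in-memory list standing in for the activity database, and the class names are all hypothetical.

```python
from dataclasses import dataclass, field

import numpy as np


@dataclass
class Zone:
    name: str
    x0: int
    y0: int
    x1: int
    y1: int
    threshold: float = 0.02  # hypothetical: fraction of changed pixels that counts as activity


@dataclass
class ActivityMonitor:
    zones: list
    diff_level: int = 25  # hypothetical per-pixel change threshold on 0-255 grayscale
    database: list = field(default_factory=list)  # stand-in for the activity database

    def update(self, prev: np.ndarray, curr: np.ndarray, timestamp: float) -> list:
        """Compare two grayscale frames; log activity and return names of alerting zones."""
        # int16 avoids uint8 wrap-around when subtracting frames.
        changed = np.abs(curr.astype(np.int16) - prev.astype(np.int16)) > self.diff_level
        alerts = []
        for z in self.zones:
            region = changed[z.y0:z.y1, z.x0:z.x1]
            if region.size and region.mean() > z.threshold:
                ys, xs = np.nonzero(region)
                # Record when and where (centroid in scene coordinates) activity occurred.
                self.database.append({
                    "zone": z.name,
                    "time": timestamp,
                    "x": z.x0 + float(xs.mean()),
                    "y": z.y0 + float(ys.mean()),
                })
                alerts.append(z.name)
        return alerts
```

For example, if a 10×10 patch changes inside a 50×50 zone, 4% of the zone's pixels differ, which exceeds the 2% threshold, so `update` returns that zone's name and appends one record whose centroid lies at the center of the patch. An activity view could then plot these records by time and location.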

It should be appreciated that all combinations of the foregoing concepts and additional concepts discussed in greater detail below (provided such concepts are not mutually inconsistent) are contemplated as being part of the inventive subject matter disclosed herein. In particular, all combinations of claimed subject matter appearing at the end of this disclosure are contemplated as being part of the inventive subject matter disclosed herein. It should also be appreciated that terminology explicitly employed herein that also may appear in any disclosure incorporated by reference should be accorded a meaning most consistent with the particular concepts disclosed herein.

…