Massive Cloud Auditing Using Data Mining on Hadoop

- 15 pages

- Distribution Statement C

- July 11, 2011

Massive Cloud Auditing

- Cloud auditing of massive logs requires analyzing data volumes that routinely cross the petabyte threshold.

- At this scale, the computational and storage requirements of any data analysis methodology increase significantly.

- Distributed data mining algorithms and implementation techniques are needed to meet the scalability and performance requirements of such massive data analyses.

- Current distributed data mining approaches have serious performance and effectiveness problems when extracting information from cloud auditing logs.

- Reasons include scalability, dynamic and hybrid workloads, high sensitivity, and stringent time constraints.

…

Traffic Characterization

- Cloud traffic logs are accumulated from diverse and geographically disparate sources.

- Sources include stored and live traffic from popular applications: web and email.

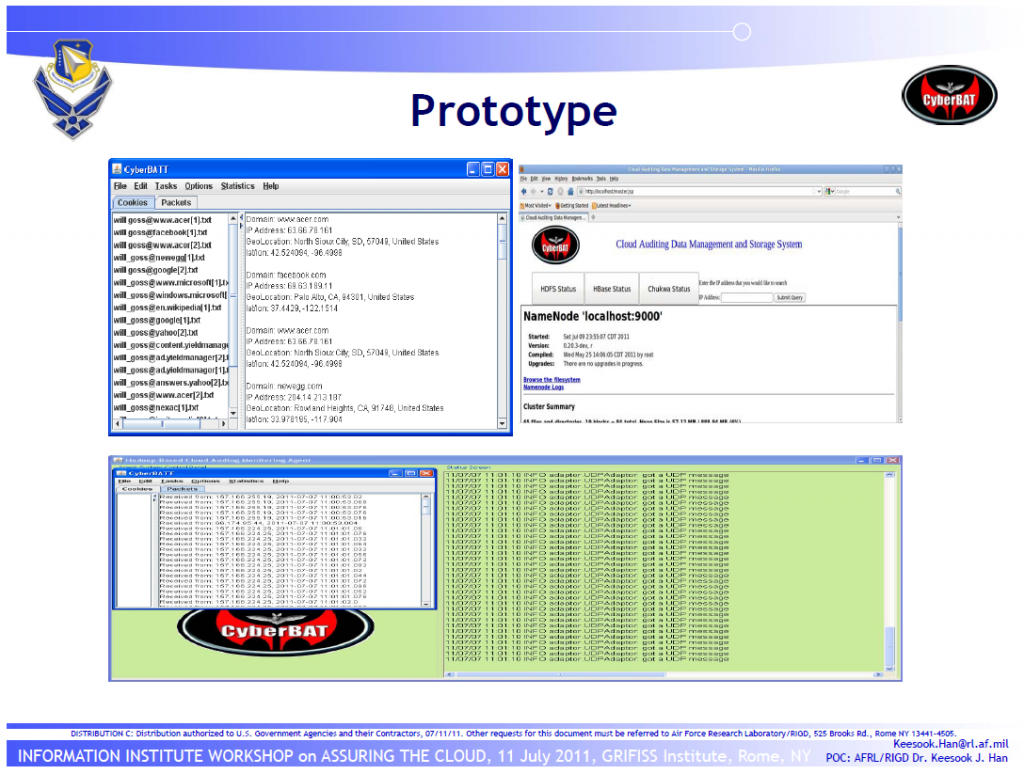

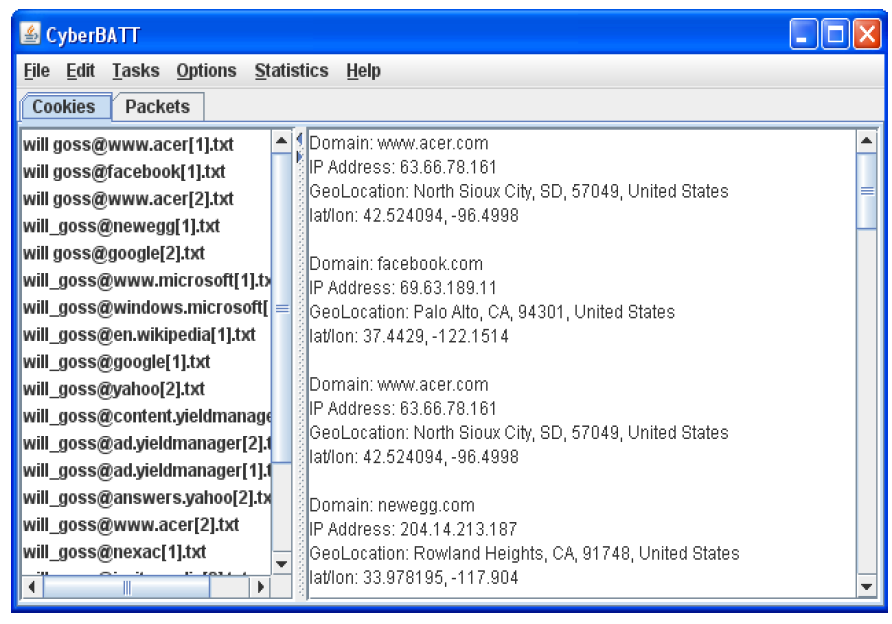

- Live packet captures from packet-sniffing tools (e.g., Wireshark).

- Honeypot traffic from UTSA consisting of malicious traffic.

- Augment traffic information with IP, DNS, and geolocation analysis procured from publicly available datasets, along with high-level network and flow statistics.

- IP geolocation from public databases retrieves the name and street address of the organization that registered the address block. For large ISPs, the registered street address usually differs from the real location of its hosts.

- Measurement-based IP geolocation uses active packet delay measurements to approximate the geographic location of network hosts.

- Secure IP geolocation defends against adversaries who manipulate packet delay measurements to forge locations.

- Traffic characterization will generate massive amounts of data, requiring distributed data storage.
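Measurement-based geolocation can be sketched in the style of constraint-based geolocation (CBG): each landmark with a known position converts its round-trip time (RTT) to the target into an upper bound on distance, and the target must lie inside the intersection of the resulting circles. The Python below is a minimal illustration, not the method used in this project; the propagation speed of 200 km/ms (roughly two-thirds of the speed of light, typical for fiber), the 1-degree search grid, and all function names are assumptions.

```python
import math

# Assumption: signals travel through fiber at about 2/3 c, i.e. ~200 km per ms.
KM_PER_MS = 200.0
EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def max_distance_km(rtt_ms):
    """Upper bound on landmark-to-target distance: one-way time times signal speed."""
    return (rtt_ms / 2.0) * KM_PER_MS


def feasible_region(landmarks, step_deg=1.0):
    """Grid points that satisfy every landmark's distance bound.

    landmarks: list of (lat, lon, rtt_ms) for hosts whose locations are known.
    """
    points = []
    for i in range(int(180 / step_deg) + 1):
        lat = -90.0 + i * step_deg
        for j in range(int(360 / step_deg)):
            lon = -180.0 + j * step_deg
            if all(haversine_km(lat, lon, la, lo) <= max_distance_km(rtt)
                   for la, lo, rtt in landmarks):
                points.append((lat, lon))
    return points


def estimate_location(landmarks, step_deg=1.0):
    """Centroid of the feasible region, or None if no grid point satisfies all bounds.

    The plain average of longitudes is only meaningful away from the +/-180 meridian.
    """
    region = feasible_region(landmarks, step_deg)
    if not region:
        return None
    lat = sum(p[0] for p in region) / len(region)
    lon = sum(p[1] for p in region) / len(region)
    return lat, lon
```

Because queuing and routing add delay rather than remove it, a slow RTT loosens a bound without excluding the true location; under the assumed propagation speed, an empty region means the measurements are mutually inconsistent.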

…

Online Data Mining

- Develop data mining algorithms that work in a massively parallel yet online fashion to mine large data streams.

- Reduce the time between query submission and obtaining results.

- The overall speed of query processing depends critically on query response time.

- The Map-Reduce programming model is used for fault-tolerant, massively parallel data crunching.

- However, Map-Reduce implementations work only in batch mode and do not allow stream processing or the use of preliminary results.
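One way to expose preliminary results, in the spirit of online aggregation, is to let the reduce side merge mapper outputs as each one finishes and publish a scaled estimate after every merge instead of waiting for the whole batch. The sketch below simulates this in a single Python process, with a thread pool standing in for parallel mappers; it is not Hadoop's API, and the log format `<src_ip> <event>` and all function names are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Dict, Iterator, List, Tuple


def map_chunk(lines: List[str]) -> Counter:
    """Map phase with a local combiner: count events per source IP in one chunk.

    Assumes each log line looks like '<src_ip> <event>' (hypothetical format).
    """
    counts = Counter()
    for line in lines:
        parts = line.split()
        if parts:
            counts[parts[0]] += 1
    return counts


def online_reduce(chunks: List[List[str]],
                  workers: int = 4) -> Iterator[Tuple[float, Dict[str, float]]]:
    """Yield (fraction_done, estimated_totals) each time a mapper finishes.

    Partial counts are scaled by total/finished chunks; this is an unbiased
    estimate only if chunks finish in random order. The last yield is exact.
    """
    total = len(chunks)
    running = Counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(map_chunk, c) for c in chunks]
        for done, fut in enumerate(as_completed(futures), start=1):
            running.update(fut.result())  # reduce step: sum per-key counts
            scale = total / done
            yield done / total, {ip: n * scale for ip, n in running.items()}
```

A query front end could display each yielded estimate as it arrives and let the analyst stop early once the numbers stabilize.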

…